Strategies for getting feedback on your documentation

Most documentation feedback arrives too late, after users have already struggled.

You publish documentation. Users struggle with it, give up, or contact support. Eventually someone tells you the docs were confusing, but by then dozens of users have already hit the same problem.


Below are strategies for catching documentation issues earlier: before publishing, and continuously after. They’re ordered by importance.

Strategy 1: Pre-publication review

Get feedback before documentation goes live.

The approach:

Get up to three reviewers with different perspectives:

A subject matter expert (catches technical errors)

A technical writer (checks clarity and style)

Someone without context (tests whether the instructions actually work)

You don’t need all three. Even one reviewer helps. The key: tell them what to focus on. “Check technical accuracy” is clearer than “review this.”

When this works:

This strategy works when reviewers are available and you’ve allocated time for reviews.

When this fails:

Tight deadlines kill this strategy. So do unavailable reviewers. And if your documentation changes frequently, reviews become a bottleneck.

Tradeoff:

Reviews add time to the publishing process, but they catch major issues before users see them. Schedule review time upfront, because teams often leave it out of estimates.

Not everything needs the same level of review. A new tutorial deserves a thorough review. A typo fix in a reference page? A quick scan is fine. Save your reviewers’ time for what matters.

Priority: High. Preventing issues is cheaper than fixing them after users complain.

Strategy 2: Monitor communication channels

Watch where users discuss your documentation.

The approach:

Monitor support tickets, forums, GitHub issues, social media, and internal Slack channels. Look for patterns like:

“The docs don’t explain X”

“I couldn’t figure out how to Y”

“Spent 2 hours on Z before...”

When you spot problems, create documentation issues from them. Track them and fix them.
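If your channels can be exported as text, a small script can make a first pass over them. This is a minimal Python sketch, assuming you already have messages as plain strings tagged with their source; the `Message` type, the channel labels, and the phrase patterns are illustrative assumptions, not a standard. Keyword matching only surfaces candidates, so a person still reads each hit before filing a documentation issue.

```python
import re
from dataclasses import dataclass

# Phrases that often signal a documentation gap. These patterns are
# illustrative assumptions; tune them to how your users actually write.
# [’'] accepts both curly and straight apostrophes.
DOC_PAIN_PATTERNS = [
    re.compile(r"\bdocs? (?:don[’']?t|doesn[’']?t) (?:explain|mention|cover)\b", re.IGNORECASE),
    re.compile(r"\bcouldn[’']?t (?:figure out|find) how to\b", re.IGNORECASE),
    re.compile(r"\bspent \d+ (?:hours?|minutes?|days?)\b", re.IGNORECASE),
]


@dataclass
class Message:
    source: str  # hypothetical channel label, e.g. "support", "forum", "github"
    text: str


def flag_doc_issues(messages):
    """Return the messages that match at least one documentation-pain pattern."""
    return [m for m in messages if any(p.search(m.text) for p in DOC_PAIN_PATTERNS)]


if __name__ == "__main__":
    inbox = [
        Message("support", "The docs don't explain pagination"),
        Message("forum", "Great product!"),
        Message("github", "Spent 2 hours on auth before giving up"),
    ]
    # Prints the support and github messages; the forum message has no match.
    for m in flag_doc_issues(inbox):
        print(f"[{m.source}] {m.text}")
```

Expect false positives ("spent 2 hours" can describe anything) and misses; the point is to shorten the list a human has to read, not to replace reading it.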

When this works:

Always. This should be your baseline. Users talk about your documentation whether you listen or not.

When this fails:

Low-traffic products don’t generate enough signal. Very new documentation hasn’t had time for users to hit issues yet.

Tradeoff:

Monitoring requires active effort, but the feedback is unfiltered. Users aren’t trying to be nice; they’re venting or asking for help, and that candor is what makes it valuable.

Priority: High. Always useful, and should be happening regardless of other strategies.

Strategy 3: On-page feedback widget

Put a feedback mechanism directly on the documentation page.

The approach:

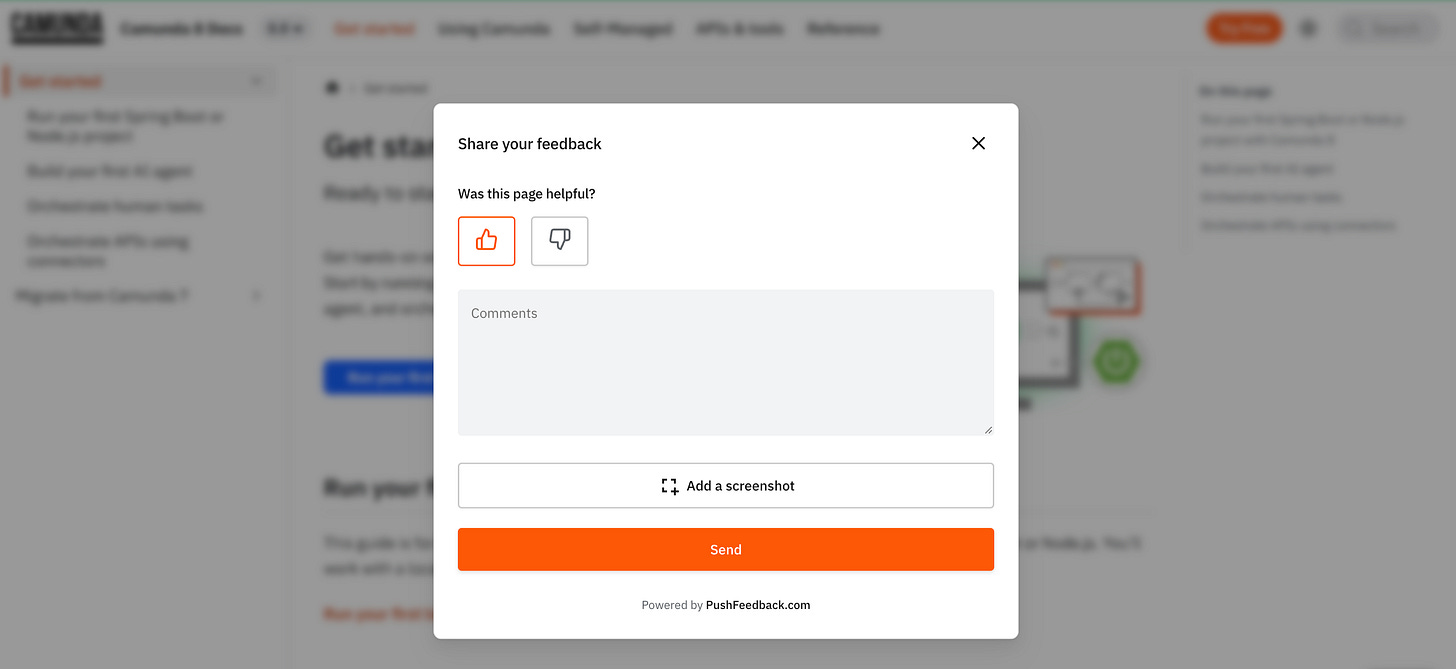

Add a feedback button to the page. Users can click it, highlight a section, and say what’s wrong. Ideally, it’s anonymous, requires no account, and routes feedback directly to your issue tracker.

Tools like PushFeedback let users annotate specific sections visually: they see the issue, highlight it, and leave a comment. You get a screenshot with context.

Similarly, if you have an AI chatbot on your docs, review what users ask it. Look for questions the chatbot couldn’t answer (missing documentation), questions asked repeatedly (unclear documentation), and questions that should be findable through search (navigation problems).

Chatbot queries reveal what users are trying to do, not what they think you want to hear. Some teams use these queries to prioritize new documentation. If 50 users ask about webhook security in a month, write that guide.
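That review can start as simple counting. This is a minimal Python sketch, assuming your chatbot can export its log as (question, answered) pairs; that export format and the `summarize_chat_log` helper are hypothetical. Exact matching after normalization is a crude stand-in for real topic grouping: it only groups phrasings that are nearly identical.

```python
import re
from collections import Counter


def normalize(query):
    """Lowercase and replace punctuation with spaces so trivially different phrasings match."""
    cleaned = re.sub(r"[^\w\s]", " ", query.lower())
    return " ".join(cleaned.split())


def summarize_chat_log(log, min_count=2):
    """Summarize a chatbot log given as (question, answered) pairs.

    Returns (repeated, unanswered):
      repeated   -- (question, count) pairs asked at least min_count times,
                    most frequent first (candidates for unclear documentation)
      unanswered -- distinct questions the bot could not answer
                    (candidates for missing documentation)
    """
    counts = Counter(normalize(q) for q, _ in log)
    repeated = [(q, n) for q, n in counts.most_common() if n >= min_count]
    unanswered = sorted({normalize(q) for q, answered in log if not answered})
    return repeated, unanswered


if __name__ == "__main__":
    log = [
        ("How do I secure webhooks?", False),
        ("how do I secure webhooks", False),
        ("What is an API key?", True),
    ]
    repeated, unanswered = summarize_chat_log(log)
    print(repeated)    # [('how do i secure webhooks', 2)]
    print(unanswered)  # ['how do i secure webhooks']
```

Run it over a month of logs and sort by count; a question that is both frequent and unanswered is a strong candidate for a new guide.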

When this works:

This strategy shines for external documentation with meaningful traffic.

When this fails:

Internal docs where everyone already reports problems through Slack or GitHub issues. Low-traffic documentation (not enough signal).

Tradeoff:

Feedback widgets generate noise. You’ll get “this sucks” comments with no detail. But you’ll also catch issues you’d never discover otherwise, so the noise is worth it.

Alternative:

You can link to GitHub issues instead. This works for developer-heavy audiences familiar with GitHub and costs nothing (no widget). But most users won’t do it because it requires leaving the page, creating an account, and giving public feedback.
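If you go this route, you can lower the friction a little by prefilling the issue. GitHub’s new-issue page reads `title` and `body` query parameters, so each page can carry a link that already names the page being reported. This is a minimal Python sketch; the `issue_link` helper, the repository name, and the template wording are placeholders.

```python
from urllib.parse import urlencode


def issue_link(repo, page_url, page_title):
    """Build a GitHub new-issue URL prefilled with the page being reported.

    repo is "owner/name" (placeholder). GitHub reads the title and body
    query parameters on /issues/new; the wording here is only an example.
    """
    params = {
        "title": f"Docs feedback: {page_title}",
        "body": f"Page: {page_url}\n\nWhat was unclear or wrong?\n",
    }
    return f"https://github.com/{repo}/issues/new?{urlencode(params)}"


if __name__ == "__main__":
    print(issue_link("example-org/docs", "https://docs.example.com/webhooks", "Webhook security"))
```

A prefilled link removes the “which page was it?” step, but users still need a GitHub account and still give feedback in public.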

Priority: Medium. Scales well. Captures feedback you wouldn’t get otherwise.

Strategy 4: User testing sessions

Watch someone use your documentation in real time.

The approach:

Give a participant a realistic task and the relevant documentation. Then don’t explain anything and don’t help them. Just watch where they pause, re-read, or give up, and take notes on what you see.

When this works:

User testing sessions work well for complex workflows, multi-step tutorials, and onboarding documentation. You need access to users willing to participate.

When this fails:

Simple reference docs have nothing to “test.” And if users won’t give you time, this strategy is dead.

Creative variation:

We helped Finboot run an “Escape Room” using their docs. The company split into teams.

The challenge was to solve a puzzle by following the documentation: send blockchain transactions in the correct sequence.

Everyone experienced the documentation pain points firsthand and spotted inconsistencies, ambiguous instructions, and missing steps. The whole company became motivated to fix it.

Tradeoff:

User testing requires coordination, but it makes abstract documentation problems concrete.

Priority: Medium. Intensive but valuable for complex documentation.

Strategy 5: User interviews

Talk to users who recently used your documentation.

The approach:

Schedule time with users who recently used your docs. Ask what worked and where they got stuck. Listen to their experience.

When this works:

Interviews work best for internal documentation where you have easy access to users. They’re great for B2B products where you know your users, and especially valuable after major releases when users are actively engaging with your docs.

When this fails:

External users at scale are hard to schedule. Anonymous user bases can’t be reached. And if you need volume, interviews don’t scale well enough.

Tradeoff:

Interviews are time-intensive, but they reveal things surveys miss. You’ll learn about the specific sentence that confused them or the assumption they made that broke everything.

Priority: Medium. High value when you can do it, but it doesn’t scale.

Strategy 6: Surveys

Capture general sentiment about documentation.

The approach:

Ask broad questions, like:

How helpful is our documentation? (1-10)

What topics are missing?

How can we improve?

When this works:

Surveys work for measuring overall documentation health, identifying gaps (missing topics), and getting directional feedback at scale.

When this fails:

Surveys fail at finding specific bugs, understanding why users struggle, and identifying which pages are broken. Users don’t remember which specific guide they read two weeks ago.

Tradeoff:

Surveys are easy to deploy and give you trends, but they don’t give you actionable issues. You’ll learn “documentation could be clearer” but not which paragraph confused people.

Priority: Low. Good for measuring health. Not for finding specific problems.

Pick your strategy

Most teams start with:

1. Pre-publication review (prevent issues)

2. Monitoring (baseline, always useful)

3. Add other strategies based on constraints (budget, team size, user base, and how much traffic your docs get)

Your documentation improves when you stop waiting for complaints and start actively seeking problems.